Fine-tuning Gemini to fit business needs

Introduction

Fine-tuning Gemini involves customizing a pre-trained model to better suit specific tasks or domains. This process leverages the vast amount of general knowledge the model has already acquired during its initial training on diverse datasets. Fine-tuning allows the model to adjust its parameters and learn from a smaller, more specific dataset that is relevant to the particular use case.

Why should we fine-tune?

- Specialized Knowledge: Training the model on data from a particular topic or industry makes it more accurate and precise in that domain.

- Less Data Needed: Because the model has already learned a lot during its initial training, fine-tuning needs far less data than training a model from scratch.

- Saving Time and Money: Fine-tuning is faster and cheaper than building a new model from the ground up, which makes it practical for a wide range of uses.

- Better Performance: Fine-tuning improves the model's performance on specific tasks, so it produces more accurate and helpful results.

Gathering data

Gathering data is one of the most important steps in fine-tuning a model. The quality and relevance of the data used for fine-tuning directly impact the model's performance. When collecting data, it is crucial to ensure that it is clean, accurate, and representative of the task or domain the model is being tailored for. This process often involves curating datasets from various sources, cleaning the data to remove any errors or inconsistencies, and ensuring that it covers the necessary aspects of the problem.
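As a concrete example of the cleaning step, here is a minimal Python sketch. It assumes the examples are already loaded as `(input, output)` string pairs (for instance, rows exported from a spreadsheet), and the function name `clean_examples` is hypothetical. It trims whitespace, drops rows missing either side, removes exact duplicates, and reports inputs that map to conflicting outputs, which is one common kind of inconsistency.

```python
from collections import defaultdict


def clean_examples(rows):
    """Clean (input, output) pairs; return (cleaned_pairs, conflicting_inputs).

    Hypothetical helper: these checks are common cleaning steps, not an
    exhaustive list.
    """
    cleaned = []
    seen = set()
    outputs_by_input = defaultdict(set)
    for raw_input, raw_output in rows:
        # Treat missing cells (None) as empty strings and trim stray whitespace.
        inp = (raw_input or "").strip()
        out = (raw_output or "").strip()
        if not inp or not out:
            continue  # incomplete example: drop it
        outputs_by_input[inp].add(out)
        if (inp, out) in seen:
            continue  # exact duplicate: keep only the first copy
        seen.add((inp, out))
        cleaned.append((inp, out))
    # The same input with different outputs sends the model mixed signals.
    conflicts = sorted(i for i, outs in outputs_by_input.items() if len(outs) > 1)
    return cleaned, conflicts


rows = [
    ("What is 2 + 2?", "4"),
    ("  What is 2 + 2? ", "4"),       # duplicate once whitespace is trimmed
    ("Capital of France?", ""),       # missing output
    ("Capital of France?", "Paris"),
    ("Capital of France?", "Lyon"),   # contradicts the previous row
]
cleaned, conflicts = clean_examples(rows)
print(len(cleaned), conflicts)  # 3 ['Capital of France?']
```

Conflicts are only reported, not resolved, because deciding which output is correct usually needs a human who knows the domain.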

Garbage In, Garbage Out

A key principle to remember during data gathering is "garbage in, garbage out": if the data used for fine-tuning is poor-quality or irrelevant, the model's performance will suffer, because the model can only be as good as the data it learns from. Investing time and effort in collecting high-quality, relevant data is therefore essential for getting the best results from fine-tuning. Clean, appropriate data sets a solid foundation for a well-performing and reliable model.

Google lets us create datasets in Google Sheets, which makes it easy to collaborate on building them. At the time of writing, you need at least 20 examples to start the training process, although Google recommends 100 to 500 examples for better results.

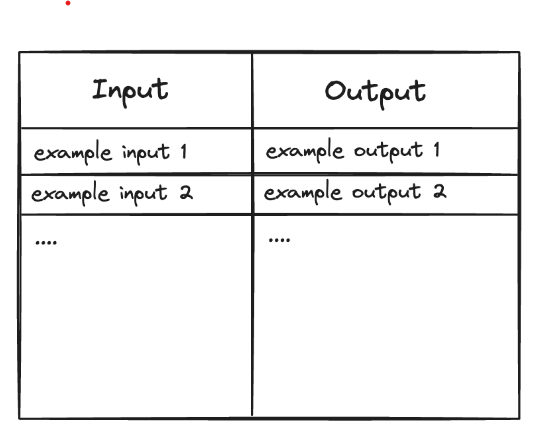

The dataset should follow the format shown above, where each row pairs an input with the output you expect the model to produce.
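Before importing, you might check the dataset programmatically. The sketch below is a hypothetical helper, not part of Google AI Studio: it assumes the sheet has been exported as CSV with a header row whose columns are named `input` and `output` (adjust the names to match your sheet), and it applies the thresholds mentioned above, namely at least 20 examples required and 100 to 500 recommended at the time of writing.

```python
import csv
import io

MIN_EXAMPLES = 20       # minimum required at the time of writing
RECOMMENDED_MIN = 100   # lower end of Google's recommended 100-500 range


def check_dataset(csv_text, input_col="input", output_col="output"):
    """Return a list of problems in a CSV dataset; an empty list means none were found."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = [c for c in (input_col, output_col) if c not in (reader.fieldnames or [])]
    if missing:
        return ["missing column(s): " + ", ".join(missing)]
    problems = []
    usable = 0
    for row_no, row in enumerate(reader, start=1):  # data rows, header excluded
        # DictReader fills short rows with None, so guard before stripping.
        if (row[input_col] or "").strip() and (row[output_col] or "").strip():
            usable += 1
        else:
            problems.append(f"row {row_no}: empty input or output")
    if usable < MIN_EXAMPLES:
        problems.append(f"only {usable} usable examples; at least {MIN_EXAMPLES} are required")
    elif usable < RECOMMENDED_MIN:
        problems.append(f"{usable} usable examples; {RECOMMENDED_MIN}-500 are recommended")
    return problems


sample = "input,output\n" + "".join(f"question {i},answer {i}\n" for i in range(25))
print(check_dataset(sample))  # ['25 usable examples; 100-500 are recommended']
```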

Start fine-tuning

To get started, sign in to Google AI Studio (https://ai.google.dev/aistudio), where we will train the Gemini model.

Once logged in, go to the "New Tuned Model" section and import the Google Sheet where your dataset is stored.

After importing the dataset, select the base model you want to use. If you need to adjust the advanced settings, here is a brief explanation of each option.

- Tuning epochs - An epoch is one complete pass over the training dataset, so the number of epochs is how many times the model trains on each example. Too few epochs can result in underfitting, where the model hasn't learned enough from the data. Too many epochs can lead to overfitting, where the model learns the training data too well, including its noise and outliers, and performs poorly on new, unseen data.

- Learning rate multiplier - The learning rate controls how much the model's parameters (weights and biases) change at each training update, and the learning rate multiplier scales the base learning rate up or down. A higher learning rate can speed up training but risks overshooting the optimal parameter values. A lower learning rate makes more precise adjustments but slows training down.

- Batch size - Batch size is the number of training examples used in one iteration of updating the model's parameters. Smaller batches mean more frequent, though noisier, updates to the parameters. Larger batches give more stable and accurate estimates of the gradient, which leads to smoother updates. However, they require more memory and compute per step, and because each epoch then contains fewer updates, they can slow down convergence.
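To make the three settings concrete, here is a minimal toy sketch in Python. It is not how Gemini is tuned internally: it fits a single weight `w` so that `y ≈ w * x` using mini-batch gradient descent, and the function names, data, and hyperparameter values are all hypothetical, chosen only for illustration. Each epoch is one full pass over the shuffled data, each batch produces one parameter update, and the effective learning rate is the base rate scaled by the multiplier.

```python
import math
import random


def train_toy_model(xs, ys, epochs, batch_size, base_lr, lr_multiplier, seed=0):
    """Toy mini-batch gradient descent fitting y ≈ w * x (hypothetical example).

    Returns the learned weight and the mean squared error after each epoch.
    """
    rng = random.Random(seed)
    lr = base_lr * lr_multiplier  # the multiplier scales the base learning rate
    w = 0.0                       # the single "parameter", starting untrained
    order = list(range(len(xs)))
    epoch_losses = []
    for _ in range(epochs):       # one epoch = one full pass over the dataset
        rng.shuffle(order)
        for start in range(0, len(order), batch_size):
            batch = order[start:start + batch_size]  # the last batch may be smaller
            # Gradient of the batch's mean squared error with respect to w.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= lr * grad        # one parameter update per batch
        loss = sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)
        epoch_losses.append(loss)
    return w, epoch_losses


def updates_per_run(num_examples, epochs, batch_size):
    """Total number of parameter updates: ceil(N / batch_size) per epoch."""
    return epochs * math.ceil(num_examples / batch_size)


# Hypothetical data: 20 points on the line y = 2x, so the ideal weight is 2.
xs = [i / 10 for i in range(1, 21)]
ys = [2 * x for x in xs]

for multiplier in (0.1, 1.0, 100.0):  # too low, moderate, deliberately far too high
    w, losses = train_toy_model(xs, ys, epochs=5, batch_size=4,
                                base_lr=0.1, lr_multiplier=multiplier)
    print(f"multiplier={multiplier}: w={w:.4g}, final loss={losses[-1]:.3g}")

print(updates_per_run(num_examples=100, epochs=5, batch_size=16))  # 5 * ceil(100/16) = 35
```

With these toy values, the low multiplier leaves `w` well short of 2 after five epochs, the moderate one lands very close to 2, and the extreme one overshoots so badly that the loss grows by orders of magnitude, mirroring the trade-offs described in the list above.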

Finally, click the "Tune" button to start the fine-tuning process. Once it finishes, you'll see a Loss/Epoch graph in the model results. Loss measures how far the model's predictions deviate from the expected outputs in your examples, and the graph plots it after each epoch. Ideally, the curve should decrease gradually, indicating that the model improves with each epoch.
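If you want to sanity-check the curve numerically, for example with loss values read off the graph, a small helper like the hypothetical `describe_loss_curve` below can flag the basic shapes. It is a rough heuristic, not an AI Studio feature, and the 1% `plateau_tol` threshold is an arbitrary assumption.

```python
def describe_loss_curve(losses, plateau_tol=0.01):
    """Classify a per-epoch loss curve with a simple heuristic (hypothetical helper).

    losses: loss values in epoch order.
    plateau_tol: a relative drop in the final epoch below this counts as flat.
    """
    if len(losses) < 2:
        return "not enough epochs to judge"
    if losses[-1] >= losses[0]:
        return "loss did not decrease overall"
    rises = sum(1 for prev, cur in zip(losses, losses[1:]) if cur > prev)
    if rises:
        return f"loss decreased overall but rose in {rises} epoch(s)"
    # No rises, so losses[-2] >= losses[-1]; avoid dividing by zero.
    prev = losses[-2]
    last_drop = (prev - losses[-1]) / prev if prev > 0 else 0.0
    if last_drop < plateau_tol:
        return "loss decreased and has flattened out"
    return "loss is still decreasing steadily"


# Made-up values for illustration only, not real training results.
print(describe_loss_curve([2.0, 1.2, 0.8, 0.6, 0.5]))  # loss is still decreasing steadily
print(describe_loss_curve([1.0, 0.6, 0.7, 0.5]))       # loss decreased overall but rose in 1 epoch(s)
```

A curve that rises between epochs can hint at the overshooting associated with a high learning rate, while a flat tail suggests extra epochs are adding little; interpreting either remains a judgment call.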

Conclusion

Fine-tuning a Gemini model is a powerful way to tailor it to specific tasks and improve its performance. By gathering high-quality data and understanding key settings like epochs, the learning rate multiplier, and batch size, you can effectively customize the model to meet your needs. Remember that the quality of the input data is crucial: "garbage in, garbage out" applies. With careful preparation and tuning, you can achieve a model that delivers accurate and reliable results for your particular application.